… and explained by a former England captain, no less.

Statistics, Cricket and Umpire’s Call

… and explained by a former England captain, no less.


C.R. Rao passed away earlier this week, and this is a name that is familiar to anybody who has completed a course in statistics at the Masters level. Well, ought to be familiar, at any rate. And if you ask me, even undergraduate students ought to be familiar with both the name, and the many achievements of India’s best statistician.

Yes, that is a tall claim, but even folks who might disagree with me will admit that C.R. Rao makes for a very worthy contender. (And if you do disagree, please do tell me who your candidate would be.)

Here’s a lovely write-up about C.R. Rao from earlier on this year:

Professor C R Rao, the ageless maestro in mathematical statistics, was recently in the news because he received the 2023 International Prize in Statistics. The news made headlines because the Prize was called the ‘Nobel Prize in Statistics’.

https://bademian.wpcomstaging.com/2023/05/24/the-rao-centuplicate/

There’s no Nobel Prize in Statistics, but if there had indeed been one, Calyampudi Radhakrishna Rao would have got it long ago. Perhaps in 1960.

C R Rao entered the field of statistics when it wasn’t sufficiently ‘mathematical’. Classical statistics, at that point, was about gathering data, obtaining counts and averages, and estimating data variability and associations.

Think, for example, of the mammoth census exercise. Rao was among the first to bring serious mathematics into the mix. He asked questions like: When does a ‘best’ statistical estimate exist? If it does, how can we mathematically manoeuvre to make a given estimate the best? In situations where no best estimate exists, which among the candidate estimates is the most desirable?

By the way, the very last anecdote in this post was my favorite, so please make sure you read the whole thing.

You could argue for days about which of his many contributions were the most important, but there will be very little disagreement about which is the most famous: the Cramer-Rao Lower Bound.

In estimation theory and statistics, the Cramér–Rao bound (CRB) relates to estimation of a deterministic (fixed, though unknown) parameter. The result is named in honor of Harald Cramér and C. R. Rao.

https://en.wikipedia.org/wiki/Cram%C3%A9r%E2%80%93Rao_bound

Plow through the article if you like, but I would advise you against it if you are unfamiliar with statistical theory, and/or mathematical notation. You could try this on for size instead:

What is the Cramer-Rao Lower Bound?

https://www.statisticshowto.com/cramer-rao-lower-bound/

The Cramer-Rao Lower Bound (CRLB) gives a lower estimate for the variance of an unbiased estimator. Estimators that are close to the CRLB are more unbiased (i.e. more preferable to use) than estimators further away. The Cramer-Rao Lower bound is theoretical; sometimes a perfectly unbiased estimator (i.e. one that meets the CRLB) doesn’t exist. Additionally, the CRLB is difficult to calculate unless you have a very simple scenario. Easier, general, alternatives for finding the best estimator do exist. You may want to consider running a more practical alternative for point estimation, like the Method of Moments.

But even this, I would say, isn’t a great way to make oneself familiar with the subject. Try your luck with YouTube, maybe? Lots of very good videos in there, but none that I would call simple enough.

Which, of course, brings us to ChatGPT.

Here’s what I started with:

“Give me the simplest possible explanation of what the cramer-rao lower bound is, why it is important, and one example of a simple application. Thank you!”

Your mileage will vary, because you might end up using ChatGPT 3.5. Even if you were to use ChatGPT4, there’s no guarantee that the answer will be the same. It will, in fact, almost certainly be different. But that’s fine! Copy this prompt and run it.

This was my follow-up question:

“Lovely, this was helpful. Can you walk me through its theoretical derivation? Keep the explanation as simple as possible, and note that I am not very familiar with matrix algebra.”

I wanted as simple an explanation as possible from ChatGPT, which is why I added the bit about not being familiar with matrix algebra. You could say you’re not at all familiar with it. You could say you’re not familiar with statistics, or mathematical notations, or any other qualifiers you think are necessary. Don’t be shy about telling ChatGPT what you don’t know – this is important because it will tailor its answers accordingly.

This is what I went with next:

“Now imagine I have to explain this to, say, a very motivated 12 year old. This student is very willing to learn, but is unfamiliar with statistical concepts. She has, in fact, had no classes in statistics at all, but has learnt the basic concepts in statistics, and wants to run a real life experiment. In my conversations with her, the CRLB came up, and she wanted to know more about it. How would you explain the derivation of the CRLB to her?”

No twelve year old student asked me this question, to be clear. But this is just one of many, many ways in which you can try and get ChatGPT to simplify stuff for you. This answer is worth pasting in full:

“Alright, let’s use a more intuitive approach!

Setting the Scene: Imagine you’re trying to guess the number of candies in a jar. Each time you guess, you might be a little too high or a little too low, but on average, you’re pretty close. The CRLB is like a magic number that tells you the smallest amount you can be off by, on average, no matter how good your guessing strategy is.

Step-by-Step Explanation:

Conclusion: So, when you’re trying to guess the number of candies in the jar, the CRLB is like a challenge. It’s the universe saying, “Bet you can’t guess better than this!” And if you can guess with an error smaller than that magic number, then you’re doing an amazing job! If not, maybe there’s a better way to use your clues.”

Much better. But I had questions!

“Is it possible for the guess to be lower than the CRLB? If yes, I’m confused about why it should be called the “lower bound””

Here is its answer:

“Great question! Let’s clarify this.

The Cramér-Rao Lower Bound (CRLB) is a theoretical lower bound on the variance (a measure of how spread out the estimates are) of any unbiased estimator. It doesn’t directly bound the error of a single guess or measurement. Instead, it bounds the average squared error (variance) of an estimator over many, many guesses or measurements.

In our candy jar example, the CRLB is used in a slightly simplified and illustrative manner. Here’s what it means:

So, when we say “Try to get within 5 candies of the actual number” in the game, it’s a simplification for illustration. In a real-world application of the CRLB, it’s about the average performance of an estimator, not the performance on a single estimate.

In essence, while individual errors can be below the CRLB, the average squared error of a good estimator shouldn’t be below the CRLB. If it is, either the estimator is biased, or there’s some other issue with the assumptions or calculations.”

The next obvious step (to me, at any rate) was to ask it to come up with a working demo:

“Great! Can you help me build a very small demo of this experiment you just described in javascript that I can load as an HTML page?”

What a time to be alive! If you are learning statistics for the very first time, and especially if you have (in your opinion) a not-so-great stats prof, you can now make the world’s best lemonade from the lemon you’ve been handed! Here is its answer:

“Of course! Let’s create a simple demo where:

Here’s a basic HTML page with embedded JavaScript for the demo it came up with:

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Candy Guessing Game</title>
</head>
<body>
  <h2>Candy Guessing Game</h2>
  <p>Guess the number of candies in the jar (between 1 and 100):</p>
  <input type="number" id="guess" min="1" max="100">
  <button onclick="checkGuess()">Submit Guess</button>
  <p id="feedback"></p>
  <p id="crlb">Cramér-Rao Lower Bound (CRLB): 5 (This is our magic number! Try to get within 5 candies of the actual number.)</p>
  <script>
    // Randomly determine the number of candies in the jar (a whole number from 1 to 100)
    let candies = Math.floor(Math.random() * 100) + 1;

    function checkGuess() {
      const feedback = document.getElementById("feedback");
      // The input's value is a string, so convert it to a number before comparing
      const guess = Number(document.getElementById("guess").value);
      // Reject empty, fractional or out-of-range input instead of scoring it
      if (!Number.isInteger(guess) || guess < 1 || guess > 100) {
        feedback.innerText = "Please enter a whole number between 1 and 100.";
        return;
      }
      const difference = Math.abs(guess - candies);
      if (difference === 0) {
        feedback.innerText = "Amazing! You guessed it exactly right!";
      } else if (difference <= 5) { // Using 5 as our CRLB for simplicity
        feedback.innerText = "Great job! You're within the magic number! The jar has " + candies + " candies.";
      } else {
        feedback.innerText = "Keep trying! The jar has " + candies + " candies. You were off by " + difference + ".";
      }
    }
  </script>
</body>
</html>

What do you do with this code? Here’s ChatGPT again:

“You can save the above code as an .html file and open it in a web browser. The user can input their guess, and upon submission, they’ll receive feedback on how close their guess was to the actual number of candies. The CRLB is set to 5 for simplicity, so if the user’s guess is within 5 candies of the actual number, they’re doing great!”
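The page above hard-codes 5 as its magic number, which, as ChatGPT itself pointed out, is a simplification. As a rough sketch of what the bound looks like when it is actually computed, consider a different, textbook setup (an assumption here, not part of the conversation): n measurements drawn from a normal distribution whose standard deviation σ is known, with the mean as the unknown parameter. In that case the CRLB for any unbiased estimator of the mean is σ²/n, and the sample mean reaches it. The function names, the seed and the numbers (mean 50, σ of 10, n of 25) are made up for illustration.

```javascript
// Small seeded random number generator (mulberry32) so that runs are repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// One draw from Normal(mean, sd) using the Box-Muller transform.
function randomNormal(rand, mean, sd) {
  const u1 = 1 - rand(); // lies in (0, 1], which avoids log(0)
  const u2 = rand();
  const z = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  return mean + sd * z;
}

// Repeat the "take n measurements, report their average" experiment many
// times, then measure how spread out those reported averages are.
function simulateSampleMeanVariance(trueMean, sd, n, trials, seed) {
  const rand = mulberry32(seed);
  const estimates = [];
  for (let t = 0; t < trials; t++) {
    let sum = 0;
    for (let i = 0; i < n; i++) sum += randomNormal(rand, trueMean, sd);
    estimates.push(sum / n);
  }
  const averageEstimate = estimates.reduce((a, b) => a + b, 0) / trials;
  const variance =
    estimates.reduce((a, b) => a + (b - averageEstimate) ** 2, 0) / (trials - 1);
  return { averageEstimate, variance };
}

const sd = 10;
const n = 25;
const crlb = (sd * sd) / n; // sigma squared over n for this hypothetical setup
const result = simulateSampleMeanVariance(50, sd, n, 20000, 42);

console.log("CRLB for the mean:", crlb);
console.log("Simulated variance of the sample mean:", result.variance.toFixed(3));
console.log("Average of the estimates:", result.averageEstimate.toFixed(3));
```

Save it as a .js file and run it with Node, or paste it into a browser console. The simulated variance should land close to the bound and not meaningfully below it, which is the "lower bound" part in action. Any single estimate can still miss by more or less than that.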

What’s the point of having learnt an idea if you can’t discuss it in class or with like-minded friends, batchmates and peers? And so I had one final question for the best research assistant I’ve ever had:

“Thank you! One last question. Can you please give me five good questions regarding the CRLB that I can either ask in class, or use as a starting point for discussions with my batchmates or seniors. Please optimize the questions for conceptual clarity. That is, our attempts to answer these questions should make the concept, its applicability and its importance clearer to us.”

“Certainly! Here are five thought-provoking questions regarding the Cramér-Rao Lower Bound (CRLB) that can stimulate discussion and deepen understanding:

These questions are designed to encourage exploration of the foundational concepts behind the CRLB, its practical applications, and its relationship with other statistical tools and concepts. Discussing them should provide a more comprehensive and nuanced understanding of the CRLB and its significance in the field of statistics.”

There is always at least one student who says something along the lines of “No way can my college prof answer these, lol”, or “No way are my friends going to want to talk about this”, or “<Insert variant of choice here>”

Well, what about feeding these back to ChatGPT?

I’ve waited for years to write this sentence, and fellow students of econ will allow me to savor my moment of sweet, sweet revenge.

This is left as an exercise for the reader.

Honor the great man’s work by learning about it, and with ChatGPT around, you have no excuses left. Get to it! Oh, and by the way, the meta lesson, of course, is that you can do this with all of statistics – and other subjects besides.

Haffun!

If you’ve been reading the posts of the past few days, I invite you to watch this video carefully, and ask yourselves which parts of it you agree with, which parts you disagree with, and why.

Back when I used to work at the Gokhale Institute, I would get a recurring request every year without fail. What request, you ask? To get AB InBev to come on campus. To the guys at AB InBev – if you’re reading this, please do consider going to GIPE for placements. The students are thirsty to, er, learn.

But what might their work at AB InBev look like?

I don’t know for sure, but it probably will not involve working with barley and hops. It should, though, if you ask me. Building statistical models about other aspects of selling beer might rake in the moolah today, but there would be a pleasing historical symmetry about using stats to actually brew beer.

You see, you can’t – just can’t – make beer without barley and hops. And to make beer, these two things should have a number of desirable characteristics. Barley should have optimum moisture content, for example. It should have high germination quality. It needs to have an optimum level of proteins. And so on. Hops, on the other hand, should have beefed up on their alpha acids. They should be brimming with aroma and flavor compounds. There’s a world waiting to be discovered if you want to be a home-brewer, and feel free to call me over for extended testing once you have a batch ready. I’ll work for free!

But in a beer brewing company, it’s a different story. There, given the scale of production, one has to check for these characteristics. And many, many years ago – a little more than a century ago, in fact – there was a guy who was working at a beer manufacturing enterprise. And this particular gentleman wanted to test these characteristics of barley and hops.

So what would this gentleman do? He would walk along the shop-floor of the firm he worked in, and take some samples from the barley and hops that were going to be used in the production of beer. Beer aficionados who happen to be reading this blog might be interested to know the name of the firm bhaisaab worked at. Guinness – maybe you’ve heard of it?

So, anyway, off he’d go and test his samples. And if the results of the testing were encouraging, bhaisaab would give the go-ahead, and many a pint of Guinness would be produced. Truly noble and critical work, as you may well agree.

But Gosset – for that was his name, this hero of our tale – had a problem. You see, he could never be sure if the tests he was running were giving trustworthy results. And why not? Well, because he had to make accurate statements about the entire batch of barley (and hops). But in order to make an accurate statement about the entire batch, he would have liked to take larger samples.

Imagine you’re at Lasalgaon, for example, and you’ve been tasked with making sure that an entire consignment of onions is good enough to be sold. How many sacks should you open? The more sacks you open, the surer you are. But on the other hand, the more sacks you open, the less there is left to sell. (Assume that once a sack is open, it can’t be sold. No, I know that’s not how it works. Play along for the moment.)

So how many sacks should you open? Well, unless your boss happens to be a lover of statistics and statistical theory for its own sake, the answer is as few as possible.

The problem is that you’re trying to then reach a conclusion about a large population by studying a small sample. And you don’t need high falutin’ statistical theory to realize that this will not work out well.

Your sample might be the Best Thing Ever, but does that mean that you should conclude that the entire population of barley and hops is also The Best Thing Ever? Or, on the other hand, imagine that you have a strictly so-so sample. Does that mean that the entire batch should be thought of as so-so? How to tell for sure?

Worse, the statistical tools available to Gosset back then weren’t good enough to help him with this problem. The tools back then would give you a fairly precise estimate for the population, sure – but only if you took a large enough sample in the first place. And every time Gosset went to obtain a large enough sample, he met an increasingly irate superior who told him to make do with what he already had.

Or that is how I like to imagine it, at any rate.

So what to do?

Well, what our friend Gosset did is that he came up with a whole new way to solve the problem. I need, he reasoned, reasonably accurate estimates for the population. Plus, khadoos manager says no large samples.

Ergo, he said, new method to solve this problem. Let’s come up with a whole new distribution to solve the problem of talking usefully about population estimates by studying small samples. Let’s have this distribution be a little flatter around the centre, and a little fatter around the tails. That way, I can account for the greater uncertainty given the smaller sample.

And if my manager wants to be a little less khadoos, and he’s ok with me taking a larger sample, well, I’ll make my distribution a little taller around the center, and a little thinner around the tails. A large enough sample, and hell, I don’t even need my new method.

And that, my friends, is how the t-distribution came to be.
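If you would like to see those fatter tails for yourself, here is a minimal simulation sketch. It assumes measurements that come from a standard normal distribution (a made-up stand-in for Gosset's barley readings), computes the usual t-statistic from each small sample, and counts how often it lands beyond ±1.96. A normal distribution puts about 5% of its area out there. The function names, seeds and sample sizes are illustrative choices, not anything from Gosset's work.

```javascript
// Small seeded random number generator (mulberry32) so that runs are repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// One draw from Normal(mean, sd) using the Box-Muller transform.
function randomNormal(rand, mean, sd) {
  const u1 = 1 - rand(); // lies in (0, 1], which avoids log(0)
  const u2 = rand();
  const z = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  return mean + sd * z;
}

// Fraction of simulated t-statistics whose absolute value exceeds the cutoff.
// Each t-statistic is (sample mean - true mean) / (sample sd / sqrt(n)),
// with the true mean equal to 0 in this setup.
function tailFraction(n, trials, seed, cutoff) {
  const rand = mulberry32(seed);
  let extreme = 0;
  for (let t = 0; t < trials; t++) {
    const xs = [];
    for (let i = 0; i < n; i++) xs.push(randomNormal(rand, 0, 1));
    const mean = xs.reduce((a, b) => a + b, 0) / n;
    const sampleSd = Math.sqrt(
      xs.reduce((a, b) => a + (b - mean) ** 2, 0) / (n - 1)
    );
    const tStat = mean / (sampleSd / Math.sqrt(n));
    if (Math.abs(tStat) > cutoff) extreme++;
  }
  return extreme / trials;
}

const smallSampleTail = tailFraction(4, 20000, 1, 1.96);
const largeSampleTail = tailFraction(100, 20000, 2, 1.96);

console.log("Share beyond ±1.96 with samples of 4:", smallSampleTail.toFixed(3));
console.log("Share beyond ±1.96 with samples of 100:", largeSampleTail.toFixed(3));
```

With samples of four, far more than 5% of the t-statistics end up in the tails. With samples of a hundred, the share drifts back towards 5%, which is the "large enough sample, and I don't even need my new method" part of the story.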

You need to know who Gosset was, and why he did what he did, for us to work towards understanding how to resolve Precision and Oomph. But it’s going to be a grand ol’ detour, and we must meet a gentleman, a lady, and many cups of tea before we proceed.

Naman Mishra, a friend and a junior from the Gokhale Institute, was kind enough to read and comment on my post about Abhinav Bindra and the p-value. Even better, he had a little “gift” for me – a post written by somebody else about the p-value:

P values are the probability of observing a sample statistic that is at least as different from the null hypothesis as your sample statistic when you assume that the null hypothesis is true. That’s a pretty convoluted but technically correct definition—and I’ll come back it later on!

https://statisticsbyjim.com/hypothesis-testing/p-values-misinterpreted/

It is convoluted, of course, but that’s not a criticism of the author. It is, instead, an acknowledgement of how difficult this concept is.

So difficult, in fact, that even statisticians have trouble explaining the concept. (Not, I should be clear, understanding it. Explaining it, and there’s a world of a difference).
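One way to make the definition less slippery is to compute a p-value by brute force. The sketch below uses a made-up example that is not from the quoted post: a coin is flipped 100 times and shows 60 heads, and the null hypothesis is that the coin is fair. The code simulates many runs of 100 fair flips and counts how often the result is at least as far from 50 heads as the observed one. The function names, the seed and the numbers are assumptions for illustration.

```javascript
// Small seeded random number generator (mulberry32) so that runs are repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Two-sided p-value by simulation: the share of "fair coin" experiments whose
// head count is at least as far from the expected count as the observed one.
function simulatedPValue(observedHeads, flips, trials, seed) {
  const rand = mulberry32(seed);
  const expectedHeads = flips / 2;
  const observedGap = Math.abs(observedHeads - expectedHeads);
  let atLeastAsExtreme = 0;
  for (let t = 0; t < trials; t++) {
    let heads = 0;
    for (let i = 0; i < flips; i++) {
      if (rand() < 0.5) heads++;
    }
    if (Math.abs(heads - expectedHeads) >= observedGap) atLeastAsExtreme++;
  }
  return atLeastAsExtreme / trials;
}

const pValue = simulatedPValue(60, 100, 20000, 2023);
console.log("Simulated two-sided p-value:", pValue.toFixed(4));
```

Note what the number is and is not. It is the share of fair-coin worlds that look at least this odd. It is not the probability that the coin is fair.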

Well, you have my explanation up there in the Abhinav Bindra post, and hopefully it works for you. But here is the problem with the p-value, not in terms of how difficult the concept is, but rather in terms of its limitations:

We want to know if results are right, but a p-value doesn’t measure that. It can’t tell you the magnitude of an effect, the strength of the evidence or the probability that the finding was the result of chance.

https://fivethirtyeight.com/features/not-even-scientists-can-easily-explain-p-values/

In other words, the p-value is not the probability of rejecting the null when it is true. And here’s where it gets really complicated. I myself have in classes told people that the lower the p-value, the safer you should feel in rejecting the null hypothesis! And that’s not incorrect, and it’s not wrong… but well, it ain’t right either.

Consider these two paragraphs, each from the same blogpost:

But also, there’s this, from earlier on in the same blogpost:

“This.”, you can practically hear generation after generation of statistics students say with righteous anger. “This is why statistics makes no sense.”

“Boss, which is it? Can p-values help you reject the null hypothesis, or not?”

Fair question.

Here’s the answer: no.

P-values cannot help you reject the null hypothesis.

…

…

…

You knew there was a “but”, didn’t you? You knew it was coming, didn’t you? Well, congratulations, you’re right. Here goes.

But they’re used to reject the null anyway.

Why, you ask?

Well, because of four people. And because of beer and tea. And other odds and ends, and what a story it is.

And so we’ll talk about beer, and tea and other odds and ends over the days to come.

But as with all good things, let’s begin with the beer. And with the t*!

*I’ve wanted to crack a stats based dad joke forever. Yay.

If you were to watch a cooking show, you would likely be impressed with the amount of jargon that is thrown about. Participants in the show and the hosts will talk about their mise-en-place, they’ll talk about julienned vegetables, they’ll talk about a creme anglaise and a hajjar other things.

But at the end of the day, it’s take ingredients, chop ’em up, cook ’em, and eat ’em. That’s cooking demystified. Don’t get me wrong, I’m not for a moment suggesting that cooking high-falutin’ dishes isn’t complicated. Nor am I suggesting that you can become a world class chef by simplifying all of what is going on.

But I am suggesting that you can understand what is going on by simplifying the language. Sure, you can’t become a world class cook, and sure you can’t acquire the skills overnight. But you can – and if you ask me, you should – understand the what, the why and the how of the processes involved. Up to you then to master ’em, adopt ’em after adapting ’em, or discard ’em. Maybe your paneer makhani won’t be quite as good as the professional’s, but at least you’ll know why not, and why it made sense to give up on some of the more fancy shmancy steps.

Can we deconstruct the process of statistical inference?

Let’s find out.

Let’s assume, for the sake of discussion, that you and your team of intrepid stats students have been hired to find out the weight of the average Bangalorean. Maybe some higher-up somewhere has heard about how 11% of India is diabetic, and they’ve decided to find out how much the average Bangalorean weighs. You might wonder if that is the best fact-finding mission to be sent on, but as Alfred pointed out all those years ago, ours not to reason why.

And so off we go to tilt at some windmills.

Does it make sense to go around with a weighing scale, measuring the weight of every single person we can find in Bangalore?

Nope, it doesn’t. Apart from the obvious fact that it would take forever, you very quickly realize that it will take literally forever. Confused? What I mean is, even if you imagine a Bangalore where the population didn’t change at all from the time you started measuring the weight to the time you finished – even in such a Bangalore, measuring everybody’s weight would take forever.

But it won’t remain static, will it – Bangalore’s population? It likely will change. Some people will pass away, and some babies will be born. Some people will leave Bangalore, and some others will shift into Bangalore.

Not only will it take forever, it will take literally forever.

And so you decide that you will not bother trying to measure everybody’s weight. You will, instead, measure the weight of only a few people. Who are these few people? Where in Bangalore do they stay, and why only these and none other? How do we choose them? Do we pick only men from South Bangalore? Or women from East Bangalore? Only rich people near MG Road? Or only basketball players near National Games Village? Only people who speak Tamil near Whitefield? Only people who have been stuck for the last thirteen months at Silk Board? The ability to answer these questions is acquired when we learn how to do sampling.

What is sampling? Here’s our good friend, ChatGPT:

“Sampling refers to the process of selecting a subset of individuals from a larger population to gather data. In the case of a survey of the sort you’re talking about, you would need to define the target population, which could be the residents of Bangalore city. From this population, you would need to employ a sampling method (e.g., random sampling, stratified sampling) to select a representative sample of individuals whose weights will be measured.”
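To see why the choice of sampling method matters, here is a small sketch with an entirely invented population: 9,000 "general" residents and 1,000 basketball players who are, on average, heavier. None of these numbers are real data about Bangalore. The code compares a simple random sample of 200 people with a convenience sample of the first 200 basketball players. The function names, weights and seed are assumptions for illustration.

```javascript
// Small seeded random number generator (mulberry32) so that runs are repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// One draw from Normal(mean, sd) using the Box-Muller transform.
function randomNormal(rand, mean, sd) {
  const u1 = 1 - rand(); // lies in (0, 1], which avoids log(0)
  const u2 = rand();
  const z = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  return mean + sd * z;
}

// Simple random sample without replacement, via a partial Fisher-Yates shuffle.
function simpleRandomSample(items, k, rand) {
  const pool = items.slice();
  for (let i = 0; i < k; i++) {
    const j = i + Math.floor(rand() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, k);
}

const meanWeight = (people) =>
  people.reduce((sum, person) => sum + person.weight, 0) / people.length;

// Build the invented population (weights in kilograms).
const rand = mulberry32(7);
const population = [];
for (let i = 0; i < 9000; i++) {
  population.push({ group: "general", weight: randomNormal(rand, 63, 10) });
}
for (let i = 0; i < 1000; i++) {
  population.push({ group: "basketball", weight: randomNormal(rand, 80, 8) });
}

const populationMean = meanWeight(population);
const randomSampleMean = meanWeight(simpleRandomSample(population, 200, rand));
const convenienceMean = meanWeight(
  population.filter((person) => person.group === "basketball").slice(0, 200)
);

console.log("Population mean:", populationMean.toFixed(1));
console.log("Simple random sample mean:", randomSampleMean.toFixed(1));
console.log("Basketball-only sample mean:", convenienceMean.toFixed(1));
```

The random sample lands near the population mean. The basketball-only sample does not, however many players you weigh.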

One sample or many samples? That is, should you choose the most appropriate sampling method and collect only one humongous sample across all of Bangalore city, or many different (and somewhat smaller) samples? This is repeated sampling. Monsieur ChatGPT again:

“You might collect data from multiple samples, each consisting of a different group of individuals. These multiple samples allow you to capture the heterogeneity within the population and account for potential variations across different groups or locations within Bangalore city. By collecting data from multiple samples, you aim to obtain a more comprehensive understanding of the weight situation.”

So all right – from the comfort of your air-conditioned office, you direct your minions to sally forth into Namma Bengaluru, and collect the samples you wish to analyze. And verily do they sally forth, and quickly do they return with well organized sheets of Excel. Each sheet containing data pertaining to a different, well-chosen sample, naturally.

What do you do with all these pretty little sheets of samples? Well, you reach a conclusion. About what? About the average weight of folks in Bangalore. How? By studying the weights of the people mentioned in those sheets.

So, what you’re really doing is you’re reaching a conclusion about the population by studying the samples. This is statistical inference. Our friend again:

“Statistical inference involves drawing conclusions about the population based on the information collected from the sample. Statistical inference helps you make generalizations and draw meaningful conclusions about the larger population using sample data.”

Remember those pretty little Excel sheets that your minions brought back as gifts for you? If you so desire, you can have Excel give you the average weight for each of those samples. Turns out you do so desire, and now you have many different averages in a whole new sheet. Each average is the average of one of those samples, and let’s say you have thirty such samples. Therefore, of course, thirty such averages.

What to do with these thirty averages, you idly wonder, as you lazily swing back and forth in your comfortable chair. And you decide that you will try and see if these averages can’t be made to look like a distribution. Let’s say the first sample has an average weight of sixty five kilograms. And the second one sixty-three kilograms. And the seventh one is sixty-seven kilograms. And the twenty-first is seventy kilograms. Can we arrange these averages in little groups, lightest to the left and heaviest to the right, and draw little sticks to indicate how frequently a particular value occurs?

That’s a sampling distribution.

“A sampling distribution is the distribution of a statistic, such as the mean, calculated from multiple samples. In the context of estimating the average weight of citizens in Bangalore, you would collect weight data from multiple samples of individuals residing in the city. By calculating the mean weight in each sample, you can examine the distribution of these sample means across the multiple samples. The sampling distribution provides insights into the variability of the estimate and helps you assess the precision of your findings regarding the average weight in the population.”

What’s that mean – “the sampling distribution provides insights into the variability of the estimate and helps you assess the precision of your findings regarding the average weight in the population”?

Well, think of it this way. Let’s say you have to report back what the average weight is. That is, what is the average weight, in your opinion, of all of Bangalore. Should you pick the first sample and report its value? Or the eighth sample? Or the twenty-third?

Why not, you decide in a fit of inspiration, take the average of all these averages? Whatay idea, sirjee! Because even if you assume that one sample might be a little bit off from the population mean, our father what goes? Maybe the second sample will be a little bit off, but on the other side! That is, the first sample is a little lighter than the actual value. But the second sample might well be a little heavier than the actual value! The average of the two might result in the two errors canceling each other out! And if taking the average of two averages is a good idea, why, taking the average of the thirty averages is positively brilliant.

But hang on a second, you say. Just you wait, you say, for you’re on a roll now. If we can take – and follow me closely here – the average of these averages, well then. I mean to say, why not…

… why not calculate the standard deviation of these averages as well! Not only do we get the average value, but we also get the dispersion, on average, around the average value. Ooh, you genius, you.

This latest invention of yours, it has a name. It’s called the standard error:

“The standard error is a measure of the variability or uncertainty associated with an estimate. In the case of estimating the average weight of citizens in Bangalore, the standard error would quantify the uncertainty surrounding the estimated mean weight. It is typically calculated based on the observed variability in weight within the sample(s) and the information contained in the sampling distribution of the sample means. By considering the spread of the sample means around the population mean, the standard error provides an indication of how much the estimated average weight may deviate from the true population average. A smaller standard error suggests higher precision, indicating that the estimated average weight is expected to be closer to the true population average. Conversely, a larger standard error indicates greater uncertainty and variability, implying that the estimated average weight may have a wider range of potential values and may be less precise.”

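Well, almost. The standard deviation of those averages is one way to estimate the standard error of a sample mean. More often you have just the one sample, and then the standard error is estimated as that sample’s standard deviation divided by the square root of the sample size. Ask, ask. Go ahead, ask. Here’s why: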

“Imagine you have a larger sample size. With more observations, you have more information about the population, and the estimates tend to be more precise. Dividing the standard deviation by the square root of the sample size reflects this concept. It adjusts the measure of variability to match the precision associated with the sample size.”

Larger the sample size, lower the standard error. Also known as “more data is always better”. Which is why, since time immemorial, every stats prof in the world has always responded to questions about how large your sample size should be with the following statement:

“As large as possible”.

They’re your friends, you see. They wish you to have smaller standard errors.
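Here is a minimal sketch of the thirty-averages exercise, for anyone who would rather see it than take it on faith. It assumes an invented population in which weights are normally distributed with a mean of 65 kg and a standard deviation of 12 kg (not real Bangalore data), draws thirty samples, and looks at the average and the spread of the thirty sample means. It does this once with 25 people per sample and once with 400. All function names, seeds and numbers are assumptions for illustration.

```javascript
// Small seeded random number generator (mulberry32) so that runs are repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// One draw from Normal(mean, sd) using the Box-Muller transform.
function randomNormal(rand, mean, sd) {
  const u1 = 1 - rand(); // lies in (0, 1], which avoids log(0)
  const u2 = rand();
  const z = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  return mean + sd * z;
}

const POPULATION_MEAN = 65; // kg, invented
const POPULATION_SD = 12; // kg, invented

function average(xs) {
  return xs.reduce((a, b) => a + b, 0) / xs.length;
}

// Standard deviation with n - 1 in the denominator.
function standardDeviation(xs) {
  const m = average(xs);
  return Math.sqrt(xs.reduce((a, b) => a + (b - m) ** 2, 0) / (xs.length - 1));
}

// Draw thirty samples of the given size and summarize their thirty averages.
function summarize(sampleSize, seed) {
  const rand = mulberry32(seed);
  const means = [];
  for (let s = 0; s < 30; s++) {
    const sample = [];
    for (let i = 0; i < sampleSize; i++) {
      sample.push(randomNormal(rand, POPULATION_MEAN, POPULATION_SD));
    }
    means.push(average(sample));
  }
  return {
    averageOfAverages: average(means),
    spreadOfAverages: standardDeviation(means),
    sigmaOverRootN: POPULATION_SD / Math.sqrt(sampleSize),
  };
}

const smallSamples = summarize(25, 11);
const largeSamples = summarize(400, 12);

console.log("25 people per sample:", smallSamples);
console.log("400 people per sample:", largeSamples);
```

The spread of the averages shrinks as each sample gets bigger, roughly in line with the population standard deviation divided by the square root of the sample size. That shrinking spread is what the stats prof is promising you.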

And so, the sampling distribution gives you the following gifts:

And that, my friends, is the process of statistical inference.

But kahaani, as you might have guessed, abhi baaki hai. We’ll get back to this in a future post.

“Yaar matlab kyon?!”

… is a sentiment expressed by every student who has slogged through an introductory course on statistics. You plow your way through mean, median and mode for the five thousandth time, you nod your head throughout the tedium that is the discussion on the measures of dispersion, and you get the fact that the sample and population are different things. So far so good.

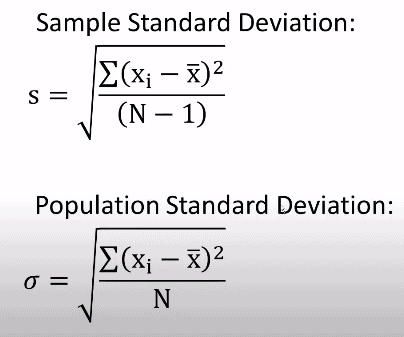

But then the professor plonks down the formula for standard deviation, and for the first time in your life (but not the last! Oh dear me no, anything but last) you see n-1 in the denominator.

And if it isn’t the class immediately after lunch, and if you are attending instead of bunking, there is a non-zero chance that you will, at worst, idly wonder about the n-1. At best, you might raise a timid hand and ask why it is n-1 rather than simply n.

Historically, students in colleges are likely to be met with one of three explanations.

One:

“That’s the formula”. This is why you’re better off bunking rather than attending these kinds of classes.

Two:

“You do divide by n, but in the case of the population standard deviation. This is the formula for the sample standard deviation”. You hear this explanation, and warily look around the class for support, for the battle clearly isn’t over. You know that you should be asking a follow-up question. But that much needed support is not forthcoming. Everybody else is studiously noting something of critical importance in their notebooks. “OK, thank you, Sir”, you mumble, and decide that you’re better off bunking more often.

Three:

“Because you lose one degree of freedom, no?”, the professor says, in a manner which clearly suggests that this ought to be bleedin’ obvious, and can we please get on with it. “Ah, yes, of course”, you respond, not even bothering to check for support. And you decide the obvious, but we knew where this was going already, didn’t we?

So what is this “degrees of freedom” business?

Here’s an explanation in three parts.

First, simple thought experiment. Pick any three numbers. Done? Cool.

Now, pick any three numbers such that they add up to ten. Done? Cool.

In the second case, how many numbers were you free to pick? If you say “three”, imagine me standing in front of you, raised eyebrow and all. “Really, three?” I would have said if I was actually there. “Let’s say the first number is 5, and the second number is 3. Are you free to pick the third number?”

And you aren’t, of course. If the first number is 5 and the second number is 3 and the three numbers you pick must add up to 10, then the third number has to be…

2. Of course.

But here’s the point. The imposition of a constraint in this little exercise means you’ve lost a degree of freedom.
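If you’d rather see that in code, here’s a tiny Python sketch. The function name is my own invention, purely for illustration. The point is simply that once the total is fixed, the third number is computed, not chosen.

```python
TARGET_SUM = 10  # the constraint from the thought experiment

def third_number(first, second, total=TARGET_SUM):
    """Return the only value the third number can take once two are chosen."""
    return total - first - second

# You are free to pick the first two numbers...
print(third_number(5, 3))       # 2, exactly as in the example above
# ...but whatever you pick, the third one is decided for you.
print(third_number(-40, 7.5))   # 42.5
```

Three numbers, one constraint, two free choices: 3 - 1 = 2 degrees of freedom.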

Here are some examples from your day to day life:

“Leave any time you like, but make sure you get home by ten pm”. Not much of a “leave any time you like” then, is it? Congratulations, you’ve lost a degree of freedom.

“You can buy anything you want, so long as it is less than a thousand rupees”.

“You can do what you like for the rest of the evening, but only after you finish all your homework”.

The imposition of a constraint implies the loss of a degree of freedom.

Got that? That was the first part of the explanation. Now on to the second part.

So ok, you now know what a degree of freedom is. It is the answer to the question “In an n-step process, how many steps am I free to choose?”. Note that this is not a technically correct explanation, and those howls of outrage you hear are statistics professors reading this and going “Dude, wtf!”. But ignore the background noise, and let’s move on.

But why does the sample standard deviation lose a degree of freedom? Why doesn’t the population standard deviation lose a degree of freedom?

Today’s a good day for thought experiments, so let’s indulge in one more. Imagine that you stay in Bangalore, and that you have to take a rickshaw and then the metro to reach your workplace. It takes you about twenty minutes to find a rickshaw, sit in it, swear at Bangalore’s traffic, and reach the metro. If you’re lucky it takes only fifteen minutes, and if you’re unlucky, it takes thirty. But usually, twenty.

It takes you about forty minutes to get into the metro, get off from the metro and walk to your office. If you’re lucky, thirty five minutes, and if you’re unlucky, forty five minutes. But usually, forty.

If reaching on time is of the utmost importance, and you have to reach by ten am, when should you leave home?

Nine am would be risky, right? You’ve left yourself with zero degrees of freedom in terms of potential downsides. If either the rickshaw ride or the metro ride ends up going a little bit over the usual, you’ll have a Very Angry Boss waiting for you.

But late night parties are late night parties, snooze buttons are snooze buttons, and here you are at nine am, hoping against hope that things work out fine. But alas, the rickshaw ride ends up taking twenty five minutes.

Now that the rickshaw ride has ended up taking twenty five, you have only thirty five minutes for the metro part of your journey. You used up some degrees of freedom on the first leg of the journey, and you have none left for the second.

Go look at that formula shown up above. Well, both formulas. What does x-bar stand for? Average, of course. Ah, but of the sample or the population? Any student who has ratta maaroed the formulas will tell you that this is the sample average. The population wala thingummy is called “mu”.
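In case the image isn’t in front of you, here are the two formulas in standard textbook notation. This is my transcription of the usual definitions, so the symbols may differ slightly from the picture: the population version uses mu and divides by N, the sample version uses x-bar and divides by n-1.

$$
\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \mu\right)^2}
\qquad\qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}
$$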

Ah, but now hang on. You’re saying that you want to understand what the population standard deviation looks like, and you’re going to form an idea for what it looks like by calculating the sample standard deviation. But the sample standard deviation itself depends upon your idea of what the population mean looks like. And where did you get an idea for what the population mean looks like? From the sample mean, of course!

But what if the sample mean is a little off? That is, what if the sample mean isn’t exactly like the population mean? Well, let’s keep one data point with us. If it turns out to be off, we’ll have that last data point be such that when you add it to the calculation of the sample mean, we will guarantee that the answer is eggjhactlee equal to the population mean. Hah!

Well ok, but that does then mean that you have… drumroll please… lost one degree of freedom.

In much the same way that taking more time on the rickshaw leg means you can’t take time on the metro leg…

Keeping one datapoint in hand when it comes to the mean implies that you lose that one degree of freedom when it comes to the sample standard deviation.

And that is why you have n-1 degrees of freedom.
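If you don’t want to take my word for it, here’s a rough Python sketch you can run. It does two things. First, it shows the constraint itself: deviations from the sample mean always add up to zero, so the last one is decided by the other n-1. Second, it draws lots of small samples from a population whose variance we know, and compares dividing by n with dividing by n-1. The population (mean 50, standard deviation 10), the sample size and the number of trials are all made-up values for illustration. I also compare variances rather than standard deviations, because that is where the n-1 correction works exactly.

```python
import random
import statistics

random.seed(42)  # fixed seed so the run is repeatable

# Part 1: deviations from the sample mean always sum to zero,
# so the last deviation is fixed once you know the other n-1.
sample = [4.0, 7.0, 9.0, 12.0]        # made-up numbers
x_bar = statistics.fmean(sample)
deviations = [x - x_bar for x in sample]
last_from_others = -sum(deviations[:-1])
print(deviations[-1], last_from_others)  # the two values match

# Part 2: simulate many small samples from a known population.
POP_MEAN, POP_SD = 50.0, 10.0   # assumed population, true variance = 100
SAMPLE_SIZE = 5
TRIALS = 20_000

def average_variance_estimates(trials=TRIALS, n=SAMPLE_SIZE):
    """Average the divide-by-n and divide-by-(n-1) estimates over many samples."""
    total_n = 0.0
    total_n_minus_1 = 0.0
    for _ in range(trials):
        draws = [random.gauss(POP_MEAN, POP_SD) for _ in range(n)]
        mean = statistics.fmean(draws)
        sum_sq = sum((x - mean) ** 2 for x in draws)
        total_n += sum_sq / n
        total_n_minus_1 += sum_sq / (n - 1)
    return total_n / trials, total_n_minus_1 / trials

avg_n, avg_n_minus_1 = average_variance_estimates()
print(f"true variance:        {POP_SD ** 2:.1f}")
print(f"average using n:      {avg_n:.1f}")          # comes out too small
print(f"average using n - 1:  {avg_n_minus_1:.1f}")  # lands close to the truth
```

Dividing by n undershoots on average, because the sample mean sits closer to the sample’s own data points than the population mean does. Dividing by n-1 is what makes up for it.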

I said three parts, remember? So what’s the third bit? If you think you’ve understood what I’ve said, go find someone to explain it to, and check if they get it. Only then, as <insert famous meme of your choice here> says, have you really understood it.

How would you explain what the p-value is to somebody who is new to statistics?

There is an argument to be made about whether the p-value should be explained at all, regardless of how well you know statistics. And we will get to those ruminations too. But for the moment, think about the fact that you want to get a person familiar with how statistics is “done” today. This person doesn’t wish to become a professional statistician, but is very much a person who is interested in statistics.

Maybe because they have team members who work in this area, and this person would like to understand what they’re talking about in their presentations. Maybe because they are interested in statistics, but in a passively curious fashion (the very best kind, if you ask me). Maybe because it is a part of their coursework. Maybe because they’re training to be economists/psychologists/sociologists/anthropologists/insert-profession-here, and statistics is something they ought to be familiar with. Whatever the reason, they want to know how to think about statistics, and they want to know how to understand the output of various statistical programmes.

And sooner or later, this person will come across the word “p-value”. Or maybe they will see “Significance” in some statistical software’s output. Or worse, “sig.” And they might want to know, what is this p-value? This blogpost is for folks who’ve asked this question.

Here’s how I explain the intuition behind p-values to my students in statistics classes.

“Imagine”, I tell them, “that Abhinav Bindra is standing at the back of the class”.

Memories are fickle, and increasingly, over the last three to four years, I then have to explain who Abhinav Bindra is.

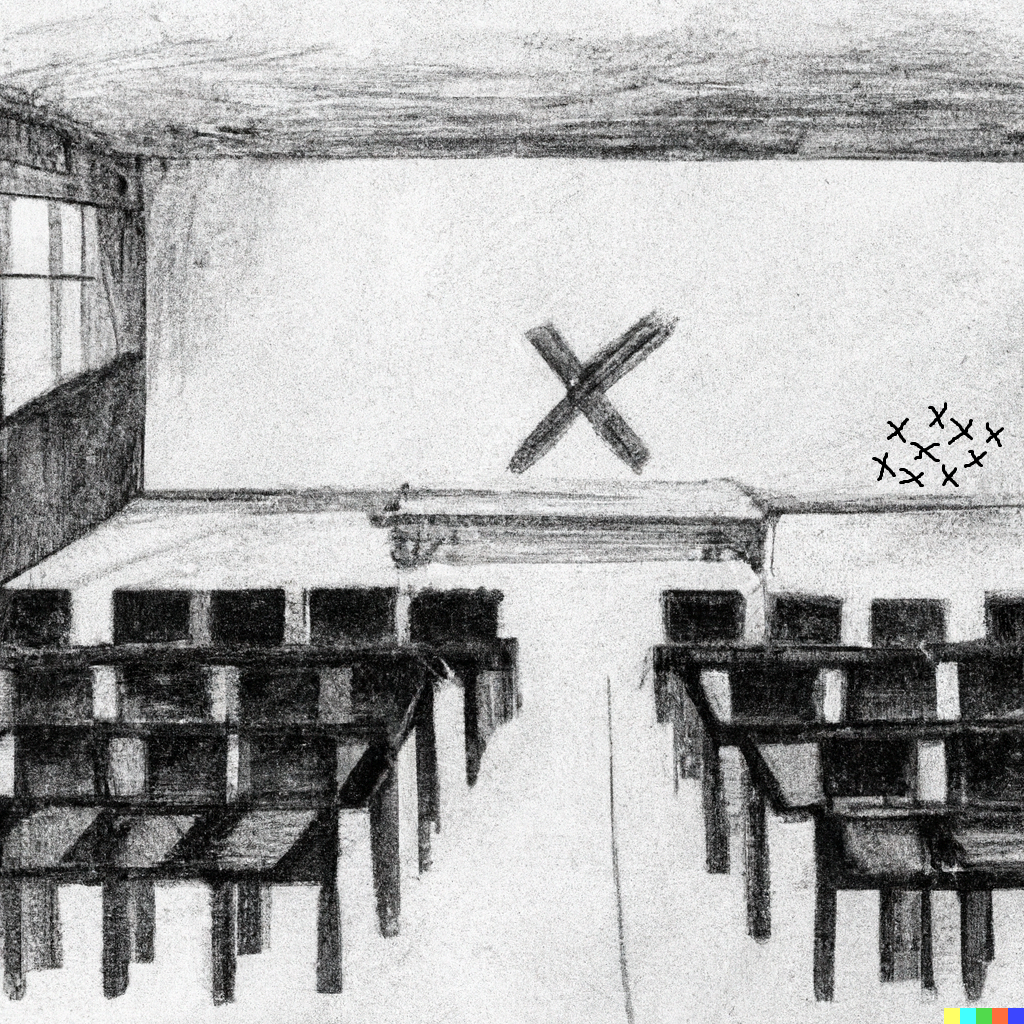

“So, ok”, I continue, once we’re back on track, “Abhinav is standing at the back of the class. He’s got his rifle with him, and he’s going to aim at this ‘X’ that I’ve drawn on the board.”

And so Abhinav begins to shoot, aiming at the large X. But something interesting happens. Instead of hitting the large X, as one would expect from a world-class shooter, he ends up shooting almost exclusively towards the right of the blackboard. Like so:

Those “x”-es that you see towards the right are to be thought of as bullet holes, and I will not be taking any questions about my artistic skills. The first picture was created by Dall-E, and the second picture may or may not have been edited by me in MS-Paint. Like I said, no further questions.

So, anyways, we should all be a little bit gobsmacked, right? Here we are, hoping to watch a world-class rifle shooter at work, and he ends up shooting, most of the time, very, very far away from the intended target.

What should we make of this fact?

Let’s think about this. We know, from having seen our fair share of these competitions on TV and on the internet, that a world class champion really should be shooting better. We know that conditions inside the classroom aren’t so windy that it might be a factor. We know that he isn’t distracted, we know that his rifle is working well, and we know that the classroom isn’t so big as for it to be a problem.

Well, we don’t “know” all of these things, but I’m going to assume them to be true, and I invite you to join me in this little thought experiment.

Then what do we conclude about the fact that all the little “x”-es are very far away from the big “X”?

Maybe – and we all have a suspicious gleam in our eyes as we turn in accusing fashion at the rifle shooter – this guy at the back of our classroom isn’t Abhinav Bindra, but some impostor?

That is, so unlikely is the data in front of our eyes, that we have no choice but to question our assumptions. The data, you see, is there – right there – in front of your eyes. You’ve checked and rechecked those little x’es, and you’ve eliminated all other possible explanations. And so you’re left with but one inescapable conclusion.

So far away are the little x’es from the big X, that we can’t help but declare the guy to be an impostor.

“The p-value is the probability of getting a result as extreme as (or more extreme than) what you observed, given that the null hypothesis is true.”

Those little x’es, they’re very extreme. If we assume that it is Abhinav Bindra, what is the probability that he will shoot that far away from the intended target? 50% probability that he will shoot that badly? 30%? 20%? 10%? Less than 5%?

And if the probability that he will shoot that badly is less than 5%, then maybe we shouldn’t be assuming that it is Abhinav Bindra in the first place?
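Here’s a rough simulation of this thought experiment in Python. Every number in it is made up for illustration, and none of it is real shooting data. I’m assuming that the real champion’s shots scatter left and right of the target following a normal distribution with a standard deviation of 10 cm, that we watch five shots, and that their average lands 12 cm away from the X. The null hypothesis is “this really is the champion”.

```python
import random
import statistics

random.seed(7)  # fixed seed so the run is repeatable

# All of these are invented numbers for the thought experiment.
CHAMPION_SPREAD_CM = 10.0      # assumed left-right scatter of a real champion
SHOTS = 5                      # shots we watched
OBSERVED_MEAN_MISS_CM = 12.0   # how far off the shots were, on average
SIMULATIONS = 100_000

def simulated_p_value(observed=OBSERVED_MEAN_MISS_CM, n_shots=SHOTS,
                      spread=CHAMPION_SPREAD_CM, simulations=SIMULATIONS):
    """Share of simulated champion sessions at least as far off as what we saw."""
    as_extreme = 0
    for _ in range(simulations):
        # Under the null hypothesis, shots are centred on the target (0 cm).
        shots = [random.gauss(0.0, spread) for _ in range(n_shots)]
        if abs(statistics.fmean(shots)) >= abs(observed):
            as_extreme += 1
    return as_extreme / simulations

p_value = simulated_p_value()
print(f"p-value: {p_value:.4f}")
print("Suspect an impostor?", p_value < 0.05)
```

With these assumptions the p-value comes out comfortably below 5%: a genuine champion would almost never shoot five shots that are this far off on average. Change the assumed spread or the observed miss and the answer changes, which is a good reminder that the p-value is only as good as the assumptions behind it.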

The TMKK of the p-value is that it gives us a way to reject the null hypothesis. Why do we need to reject the null hypothesis? We don’t “need” to reject the null hypothesis, but keep in mind that we usually formulate the null hypothesis to mean that there has been “no impact”. That rain has “no impact” on crops. That Complan has “no impact” on the growth of heights. That a new pill has “no impact” upon the health of an individual. That this blogpost has had “no impact” on your understanding of the p-value.

And we design an experiment in which we see if crops grow after it rains, if heights grow after kids have Complan, if people feel better after they take that pill, and if you guys now think you’ve got a handle on what the p-value is, after having read all this.

Designing an experiment is a separate hell in and of itself, and we’ll take a trip down that rabbit hole later.

But in each of these experiments, if we see that crops have sprouted magnificently post the rains, if we see that kids have shot up like beanstalks after they’ve had Complan, if people have made miraculous recoveries after taking that pill, and if we see EFE readers strut around like peacocks around stats professors – well then, what should we conclude?

We should conclude that our null hypothesis was wrong.

A low enough p-value allows us to be reasonably confident that our null hypothesis wasn’t correct, and that we should reject it.
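To make the Complan example a little more concrete, here is one way such a p-value could be computed. I’m using a permutation test, which is my choice of technique for this sketch and not something this post has covered. The height gains below are invented numbers, not real data. The idea: if Complan truly has “no impact”, the group labels are meaningless, so we shuffle them many times and see how often chance alone produces a gap as big as the one we observed.

```python
import random
import statistics

random.seed(2023)  # fixed seed so the run is repeatable

# Invented yearly height gains in cm. NOT real data.
complan = [6.1, 5.8, 6.5, 7.0, 6.3, 5.9, 6.8, 6.6]
no_complan = [5.2, 5.9, 5.5, 6.0, 5.1, 5.7, 5.4, 5.8]

observed_gap = statistics.fmean(complan) - statistics.fmean(no_complan)

def permutation_p_value(group_a, group_b, shuffles=10_000):
    """One-sided p-value: how often do shuffled labels give a gap this large?"""
    observed = statistics.fmean(group_a) - statistics.fmean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_big = 0
    for _ in range(shuffles):
        random.shuffle(pooled)  # labels mean nothing under the null
        gap = statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:])
        if gap >= observed:
            at_least_as_big += 1
    return at_least_as_big / shuffles

p_value = permutation_p_value(complan, no_complan)
print(f"observed gap: {observed_gap:.2f} cm")
print(f"p-value:      {p_value:.4f}")
print("Reject 'no impact'?", p_value < 0.05)
```

For these made-up numbers the shuffled gaps almost never match the observed one, so the p-value is small and we would reject the “no impact” null.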

Got that?

Congratulations, that’s the good news.

The bad news?

It’s much more complicated than that!