The 'Institutional Yes' at Amazon. Bosses are supposed to say Yes to new ideas. If they reject, they have to write a two-page thesis explaining why it is a bad idea. AWS was born out of the Institutional Yes. Harvard Business Review (2007) article on this: https://t.co/V3SCinItTm pic.twitter.com/XNwwr0Zunc

— Sachin Kalbag (@SachinKalbag) July 22, 2023

Tag: null hypothesis

The Gift That Keeps on Giving: The p-value

Naman Mishra, a friend and a junior from the Gokhale Institute, was kind enough to read and comment on my post about Abhinav Bindra and the p-value. Even better, he had a little “gift” for me – a post written by somebody else about the p-value:

P values are the probability of observing a sample statistic that is at least as different from the null hypothesis as your sample statistic when you assume that the null hypothesis is true. That’s a pretty convoluted but technically correct definition—and I’ll come back it later on!

https://statisticsbyjim.com/hypothesis-testing/p-values-misinterpreted/

It is convoluted, of course, but that’s not a criticism of the author. It is, instead, an acknowledgement of how difficult this concept is.

So difficult, in fact, that even statisticians have trouble explaining the concept. (Not, I should be clear, understanding it. Explaining it, and there’s a world of a difference).

Well, you have my explanation up there in the Abhinav Bindra post, and hopefully it works for you, but here is the problem with the p-value in terms of not how difficult the concept i, but rather in terms of its limitations:

We want to know if results are right, but a p-value doesn’t measure that. It can’t tell you the magnitude of an effect, the strength of the evidence or the probability that the finding was the result of chance.

https://fivethirtyeight.com/features/not-even-scientists-can-easily-explain-p-values/

In other words, the p-value is not the probability of rejecting the null when it is true. And here’s where it gets really complicated. I myself have in classes told people that the lower the p-value, the safer you should fail in rejecting the null hypothesis! And that’s not incorrect, and it’s not wrong… but well, it ain’t right either.

Consider these two paragraphs, each from the same blogpost:

But also, there’s this, from earlier on in the same blogpost:

“This.”, you can practically hear generation after generation of statistics students say with righteous anger. “This is why statistics makes no sense.”

“Boss, which is it? Can p-values help you reject the null hypothesis, or not?”

Fair question.

Here’s the answer: no.

P-values cannot help you reject the null hypothesis.

…

…

…

You knew there was a “but”, didn’t you? You knew it was coming, didn’t you? Well, congratulations, you’re right. Here goes.

But they’re used to reject the null anyway.

Why, you ask?

Well, because of four people. And because of beer and tea. And other odds and ends, and what a story it is.

And so we’ll talk about beer, and tea and other odds and ends over the days to come.

But as with all good things, let’s begin with the beer. And with the t*!

*I’ve wanted to crack a stats based dad joke forever. Yay.

Abhinav Bindra and the p-value

How would you explain what the p-value is to somebody who is new to statistics?

There is an argument to be made about whether the p-value should be explained at all, regardless of how well you know statistics. And we will get to those ruminations too. But for the moment, think about the fact that you want to get a person familiar with how statistics is “done” today. This person doesn’t wish to become a professional statistician, but is very much a person who is interested in statistics.

Maybe because they have team members who work in this area, and this person would like to understand what they’re talking about in their presentations. Maybe because they are interested in statistics, but in a passively curious fashion (the very best kind, if you ask me). Maybe because it is a part of their coursework. Maybe because they’re training to be economists/psychologists/sociologists/anthropologists/insert-profession-here, and statistics is something they ought to be familiar with. Whatever the reason, they want to know how to think about statistics, and they want to know how to understand the output of various statistical programmes.

And sooner or later, this person will come across the word “p-value”. Or maybe they will see “Significance” in some statistical software’s output. Or worse, “sig.” And they might want to know, what is this p-value? This blogpost is for folks who’ve asked this question.

Here’s how I explain the intuition behind p-values to my students in statistics classes.

“Imagine”, I tell them, “that Abhinav Bindra is standing at the back of the class”.

Memories are fickle, and increasingly, over the last three to four years, I then have to explain who Abhinav Bindra is.

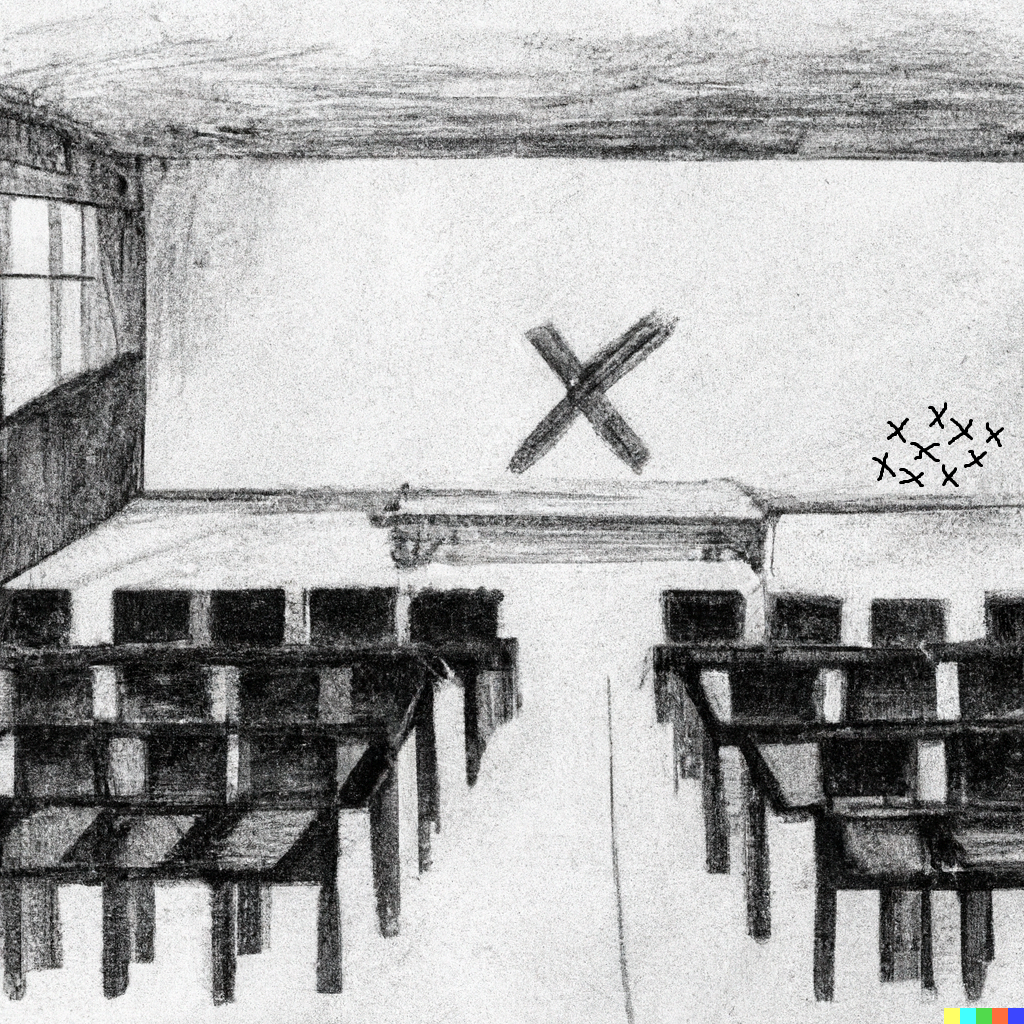

“So, ok”, I continue, once we’re back on track, “Abhinav is standing at the back of the class. He’s got his rifle with him, and he’s going to aim at this “x” that I’ve drawn on the board.

And so Abhinav begins to shoot, aiming at the large x. But something interesting happens. Instead of hitting the large X, as one would expect from a world-class shooter, he ends up aiming almost exclusively towards the right of the blackboard. Like so:

Those “x”-es that you see towards the right are to be thought of as bullet holes, and I will not be taking any questions about my artistic skills. The first picture was created by Dall-E, and the second picture may or may not have been edited by me in MS-Paint. Like I said, no further questions.

So, anyways, we should all be a little bit gobsmacked, right? Here we are, hoping to watch a world-class rifle shooter at work, and he ends up shooting most of the times very, very far away from the intended target.

What should we make of this fact?

Let’s think about this. We know, from having seen our fair share of these competitions on TV and on the internet, that a world class champion really should be shooting better. We know that conditions inside the classroom aren’t so windy that it might be a factor. We know that he isn’t distracted, we know that his rifle is working well, and we know that the classroom isn’t so big as for it to be a problem.

Well, we don’t “know” all of these things, but I’m going to assume them to be true, and I invite you to join me in this little thought experiment.

Then what do we conclude about the fact that all the little “x”‘es are very far away from the big “X”?

Maybe – and we all have a suspicious gleam in our eyes as we turn in accusing fashion at the rifle shooter – this guy at the back of our classroom isn’t Abhinav Bindra, but some impostor?

That is, so unlikely is the data in front of our eyes, that we have no choice but to question our assumptions. The data, you see, is there – right there – in front of your eyes. You’ve checked and rechecked those little x’es, and you’ve eliminated all other possible explanations. And so you’re left with but one inescapable conclusion.

So far away are the little x’es from the big X, that we can’t help but declare the guy to be an impostor.

“The p-value is the probability of getting a result as extreme as (or more extreme than) what you observed, given that the null hypothesis is true.”

Those little x’es, they’re very extreme. If we assume that it is Abhinav Bindra, what is the probability that he will shoot that far away from the intended target? 50% probability that he will aim shoot that badly? 30%? 20%? 10%? Less than 5%?

And if the probability that he will shoot that badly is less than 5%, then maybe we shouldn’t be assuming that it is Abhinav Bindra in the first place?

The TMKK of the p-value is that it gives us a way to reject the null hypothesis. Why do we need to reject the null hypothesis? We don’t “need” to reject the null hypothesis, but keep in mind that we usually formulate the null hypothesis to mean that there has been “no impact”. That rain has “no impact” on crops. That Complan has “no impact” on the growth of heights. That a new pill has “no impact” upon the health of an individual. That this blogpost has had “no impact” on your understanding of the p-value.

And we design an experiment in which we see if crops grow after it rains, if heights grow after kids have Complan, if people feel better after they take that pill, and if you guys now think you’ve got a handle on what the p-value is, after having read all this.

Designing an experiment is a separate hell in and of itself, and we’ll take a trip down that rabbit hole later.

But in each of these experiments, if we see that crops have sprouted magnificently post the rains, if we see that kids have shot up like beanstalks after they’ve had Complan, if people have made miraculous recoveries after taking that pill, and if we see EFE readers strut around like peacocks around stats professors – well then, what should we conclude?

We should conclude that our null hypothesis was wrong.

A low enough p-value allows us to be reasonably confident that our null hypothesis wasn’t correct, and that we should reject it.

Got that?

Congratulations, that’s the good news.

The bad news?

It’s much more complicated than that!

Bibek Debroy on loopholes in the CPC

That’s the Civil Procedure Code.

The average person will not have heard of Dipali Biswas or Nirmalendu Mukherjee and may not be aware of the case decided by the Supreme Court on October 5, 2021. The case was decided by a division bench, consisting of Hemant Gupta and V Ramasubramanian and the judgment was authored by Justice V Ramasubramanian. Justice Ramasubramanian observed (not part of the judgment), “Not to be put off by repeated failures, the appellants herein, like the tireless Vikramaditya, who made repeated attempts to capture Betal, started the present round and hopefully the final round.” Other than smiling about a case that took 50 years to be resolved and making wisecracks about “tareekh pe tareekh”, shouldn’t we be concerned about rules and procedures (all in the name of natural justice) that permit a travesty of justice?

https://indianexpress.com/article/opinion/columns/civil-procedure-code-loopholes-justice-delay-7617291/

I know (alas) next to nothing about the law, but there were two excerpts in this article that I wanted to highlight as a student of statistics and economics. We’ll go with statistics first.

Whenever I start to teach a new course, I always tell my students that there are two kinds of errors I can make. I can either make sure that I complete the syllabus, irrespective of whether everybody has understood it or not. Or I can make sure that everybody has understood whatever I have taught, irrespective of whether the syllabus is completed or not. Speed versus thoroughness, if you will – and both cannot be optimized for at the same time. If you’re wondering, I prefer to err on the side of making sure everybody has understood, even if it comes at the cost of an incomplete syllabus.

This is, of course, closely related to formulating the null hypothesis and asking which type of error one would rather avoid. And the reason I bring it up, is because of this exceprt:

Innumerable judgments have quoted the maxim, “justice hurried is justice buried”. By the same token, justice tarried is also justice buried and inordinate delays mean the legal system doesn’t provide adequate deterrence to mala fide action. In my view, for most civil cases, the moment issues are framed, one can predict the outcome within a range, with a reasonable degree of certainty. (Obviously, I don’t mean constitutional cases before the Supreme Court.) With no disrespect to the legal system, I think AI (artificial intelligence) is capable of delivering judgments in such cases, freeing court time for non-trivial cases.

https://indianexpress.com/article/opinion/columns/civil-procedure-code-loopholes-justice-delay-7617291/

“Justice hurried is justice buried” and “Justice tarried is justice buried” are both problems, and optimizing for one means not optimizing for the other. What Bibek Debroy is saying here is that what we have ended up choosing to optimize for the former. We make sure that every case has the opportunity to be heard at great length, and with sufficient maneuvering room for both parties.

And that’s great, but the opportunity cost is the fact that sometimes judgments can take over fifty years (and counting!).

And what is Bibek Debroy’s solution? When he suggests that AI is capable of delivering judgments in such cases, he is not saying that the AI will give a perfect judgment every time. He is not even saying that one should use AI (I think the point is rhetorical, although of course I could be wrong). He is saying that the gains in efficiency are worth the occasional case being incorrectly judged. In other words, he is optimizing for justice tarried is also justice buried – he would rather avoid the error of taking up too much time for each case, and would (presumably) be fine paying the price of having the occasional case being misjudged.

It is up to you to agree or disagree with him, or with me when it comes to how I conduct classes. But all of us should be cognizant of the opportunity costs when we decide which error we’d rather avoid!

And economics second:

Litigants and lawyers (at least on one side of a civil case) have no incentive to finish a case fast (Does the judiciary have it?).

https://indianexpress.com/article/opinion/columns/civil-procedure-code-loopholes-justice-delay-7617291/

This is more of a question (or rumination) on my part – what are the incentives of the judiciary? I can imagine scenarios in which those “on one side of a civil case” can use both official rules and underhanded stratagems to delay the eventual judgment. And since there is no incentivization in terms of speedier resolutions, are we just left with a system that is geared towards moving along ponderously forever more?

And if so, how might this be changed for the better? This is, and I’m not joking, (more than) a trillion dollar question.

And finally, as a bonus, culture:

My friend Murali Neelakantan makes the point here that isn’t really about incentive design at all, that the problem is more rooted in how we, the people of India, use and abuse the provisions of the CPC.

That takes me into even deeper and ever more unfamiliar waters, so I shall think more about this before trying to write about it!

Team “Kam Nahi Padna Chahiye”

Every time we host a party at our home, we engage in a brief and spirited… let’s go with the word “discussion”.

Said discussion is not about what is going to be on the menu – we usually find ourselves in agreement about this aspect. It is, instead, about the quantity.

In every household around the world, I suppose, this discussion plays out every time there’s a party. One side of the debate will worry about how to fit in the leftovers in the refrigerator the next day, while the other will fret about – the horror! – there not being enough food on the table midway through a meal.

There is, I should mention, no “right” answer over here. Each side makes valid arguments, and each side has logic going for it. Now, me, personally, I quite like the idea of leftovers, because what can possibly be better than waking up at 3 in the morning for no good reason, waddling over to the fridge, and getting a big fat meaty slice of whatever one may find in there? But having been a part of running a household for a decade and change, I know the challenges that leftovers can pose in terms of storage.

You might by now be wondering about where I am going with this, but asking yourself which side of the debate you fall upon when it comes to this specific issue is also a good way to understand why formulating the null hypothesis can be so very challenging.

Let’s assume that there’s going to be four adults and two kids at a party.

How many chapatis should be made?

Should the null hypothesis be: We will eat exactly 16 chapatis tonight

With the alternate then being: 16 chapatis will either be too much or too little

Or should the null hypothesis be: We will eat 20 chapatis or more

With the alternate being: We will definitely eat less than 20 chapatis tonight.

The reason we end up having a “discussion” is because we can’t agree on which outcome we would rather avoid: that of potentially being embarrassed as hosts, or the one of standing, arms exasperatedly akimbo, in front of the refrigerator post-party.

It is the outcome we would rather avoid that guides us in our formation of the null hypothesis, in other words. We give it every chance to be true, and if we reject it, it is because we are almost entirely confident that we are right in rejecting it.

What is “almost entirely“?

That is the point of the “significant at 1%” or “5%” or “10%” sentence in academic papers.

Which, of course, is another way to think about it. This set of the null and the alternate…

H0: We will eat 20 chapatis or more

Ha: We will eat less than 20 chapatis

… I am not ok rejecting the null at even 1%. Or in the language of statistics, I am not ok with committing a Type I error, even at a probability (p-value) of 1%.

A Type I error is rejecting the null when it is true. So even a 1% chance that we and our guests would have wanted to eat more than 20 chapatis* to me means that we should get more than 20 chapatis made.

At this point in our discussions (we’re both economists, so these discussions really do take place at our home), my wife exasperatedly points out that not once has the food actually fallen short.

Ah, I say, triumphantly. Can you guarantee that it won’t this time around? 100% guarantee?

No? So you’re saying there’s a teeny-tiny 1% chance that we’ll have too few chapatis?

Well, then.

Boss.

Kam nahi padna chahiye!

*Don’t judge us, ok. Sometimes the curry just is that good.

What do Income Tax Returns, Demonetization, and Fast Tag have in common?

It may help to read last Thursday’s post before you start reading this one.

Why are there such long lines at all the toll plazas across India at the moment? You may give a lot of answers, and if you have recently passed through a toll plaza yourself, your answer may well be unprintable.

Here’s mine though: you are, currently, assumed guilty until proven innocent.

All cars must wait in line, pay cash/have the RFID tag scanned, and for each car, once the payment is done, the barrier is raised, and you may pass through. The barrier stays put until the verification is done: that’s another way of saying guilty until proven innocent.

But the cool thing, to me, about implementing Fast Tag, is that once a certain percentage of vehicles in India is equipped with Fast Tags, the barriers can stay up. We will transition to a regime in which all vehicles are assumed to be innocent.

Now, as we learnt the previous week, with a large sample, there will be problems. In the new systems, in which vehicles just pass through because we assume all of them have Fast Tag implemented, there will be exceptions. There will be vehicles that don’t, in fact, have Fast Tag implemented, and so they may end up not paying the toll.

But the vast majority will have Fast Tag, and don’t have to pay with money and waiting time. The government will miss out on catching a few bad apples, but a lot of Indians will save a lot of time. On balance, everybody wins.

And of course, given technology, it should be possible to have notifications sent to those vehicles that pass through without paying. Yes, I know it seems a long way off right now, but the point is that as a statistician, we move to a world where we assume all vehicles are innocent until proven guilty, rather than the other way around.

Fast Tag implementation, when fully functional, will get the null hypothesis right.

And pre-filled income tax returns, sent to us by the government, with minimum of audits and notices, is exactly the same story. The government assumes innocence until proven otherwise, leading to a system in which every tax-paying Indian is assumed to be an honest tax-payer until proven otherwise. We already have a system that is closer to this ideal than was the case earlier, and hopefully, it will become better still with time.

And now that we’re on a roll, that’s the problem with demonetization, if you were to ask a statistician! All notes were presumed guilty, until proven innocent.

Here’s the point: if you are a student of statistics, struggling with the formation of the null, and wondering what the point is anyways*, the example from last Thursday and the three noted above should help make the topic more relatable.

And to the extent that it does, statistics becomes more relatable, more understandable and – dare I say it – fun!

*Trust me, we’ve all been there

Statistics and the NRC

Imagine the following: you are the judge who has been hearing a case in which somebody has been accused of murder, and you go to sleep one night at the end of the trial, knowing that you must give your judgment tomorrow.

God appears in your dream, and tells you that he is displeased with you. As punishment, he decrees that whatever judgment you make tomorrow will be wrong. If you say that the accused didn’t commit the murder, then he did in fact commit the murder. If you say that the accused did commit the murder, then he didn’t in fact commit the murder. He’s god, so he gets to have all of this be true.

You wake up from your sleep convinced that this wasn’t a dream, and what god said will actually happen. When you announce your judgment, whatever you say is going to be wrong.

Would you rather send an innocent man to jail, or would you rather send a guilty man free? Remember, it must be one of the two. Don’t take a peek at what follows, and try and answer this question before you read further.

My bet is that you likely chose to send a guilty man free. I have been using a variant of this exercise for years in my classes on statistics, and there appears to be something within us that rebels at the idea of sending an innocent man to jail. One reason, maybe, that explains the enduring popularity of the Shawshank Redemption.

It is at least partially for this reason that we say innocent until proven guilty. That should be, for reasons outlined above, the null hypothesis. We give it every chance to be true, and assure ourselves that the chance we’re wrong is small enough to feel safe in declaring the defendant guilty (note to statistics students: that’s one way of understanding the p-value right there)

So what does this have to do with the NRC?

Well, if you were one of the officials charged with designing this scheme, what would you say the hypothesis should be about, say, me? Indian until proven otherwise, or not Indian until proven Indian?

Like the judge, you can end up making two errors. Declaring me as not an Indian when I am, in fact, an Indian. Or declaring me as an Indian when I am, in fact, not an Indian.

To me, personally, declaring an Indian to not be an Indian is morally more problematic than declaring a non-Indian to be an Indian. And therefore my answer to my own question would be that the hypothesis ought to be Indian until proven otherwise.

But the NRC is, of course, designed exactly the other way around. Everybody is assumed to not be an Indian until proven otherwise. The burden of proof rests on the defense, not the prosecution. We are assumed guilty until proven innocent.

Not only is this problematic for reasons stated above, it will also mean that we minimize the chance of mistakenly declaring someone to be Indian. Now, one may view that as a good thing, but the price we pay is the following:

We can’t control for the other kind of error. We lose control over the chance of mistakenly declaring somebody as a non-Indian.

And given that there’s 1,300,000,000 of us (and counting), there will be a lot of Indians who will mistakenly be identified as non-Indian.

Viewed this way, the CAA is potentially a useful tool to undo the inevitable errors that will occur.

And what has a lot of people upset (myself included) is the fact that the CAA has, to the extent that I understand it, the power to undo the errors the NRC in its current form will commit, but contingent on religious faith.

I being upset about this is me expressing my opinion, and your opinion might be the same, or it might be different – and that’s fine! Debate is an awesome way to learn.

But everything that preceded the last paragraph is not opinion. If the NRC is formulated the way it is described above, there will be far too many Indians who end up being classified as non-Indians.

If the NRC is not formulated the way it is described above, then the government needs to, 1.3 billion times, try us – in the legal sense – assuming we’re Indians, and they need to prove otherwise. To say that this will be expensive, and beyond our existing state capacity, is obvious.

There are many reasons to be against the NRC (if and when it will be implemented). But this post isn’t about my opinion about the NRC as an Indian. It is about my view of the NRC as a statistical exercise.

And as a statistician, there can be only one view of the NRC: it fails the most basic criteria.

It gets the null hypothesis wrong.

..

..

..

The link to Shruti Rajagopalan’s article, which served as inspiration for this post.

My thanks to Prof. Alex Tabbarok for making this essay much, much more readable than it was at the outset (imagine!), and to Prof. Pradeep Apte for reminding me of the concept of falsifiability, which we’ll get to next Thursday.

Links for 27th March, 2019

- “As a program adapts and serves more people and more functions, it naturally requires tighter regulation. Software systems govern how we interact as groups, and that makes them unavoidably bureaucratic in nature. There will always be those who want to maintain the system and those who want to push the system’s boundaries. Conservatives and liberals emerge.”

Here’s a useful thumb-rule. Read anything written by Atul Gawande. In this article, he speaks, nominally, about the difficulty of adapting to a new computer system that is being foisted upon the medical community. But there’s much more to unpack here! Adapting to systems, mutations within systems, the difficulty of scaling, substitutes and complements, opportunity costs – and much, much more. - “Pig facial recognition works the same way as human facial recognition, the companies say. Scanners and software take in the bristles, the snout, the eyes and ears. The features are mapped. Pigs don’t all look alike when you know what to look for, they said.”

The intersection of technology, pork and the culture that is China today. Some might call this dystopian, others might fret at how slow progress is – but the article is fascinating. - “The level of u* is not fixed. It changes over time, driven by changes in labor laws, the minimum wage, government benefit programs, demographics and technology. For instance, u* might decline if workers, on average, are older; older workers are less likely to be unemployed. The level of u* might rise if unemployment benefits become more generous and this leads unemployed workers to be more picky about taking jobs.”

NAIRU – or the Non-Accelerating Inflation Rate of Unemployment, was one of the more nerdy acronyms I learnt when I was a student. This article does a good job of explaining exactly what this is, and why it matters. And most importantly, it does so in a way that isn’t confusing for the layperson. - “These calculations make clear why economists so often argue against light rail and subway construction projects. They are so expensive that ridership can only begin to cover construction and maintenance costs if the systems operate at close to their physical capacity most of the time; that is, if there are enough riders to fill up the cars when they run on two- to three-minute headways for many hours per day. Since most proposed projects do not meet this standard, economists generally argue against them. Buses can usually move the projected numbers of riders at a fraction of the cost.”

I am, and probably always will be, a huge fan of buses over other forms of public transport. And I will always be a big fan of public transport over private transport. This article explains why not just I, but other economists will also tend to favor buses over other forms of public transport. - “The principle that you are presumed to be innocent unless and until you are convicted, after a fair trial, turns out, in practice, to be a different principle altogether: for the purposes of compensation, once you are convicted your conviction is deemed to be correct. You are presumed guilty for the rest of your life, irrespective of whether your trial was fair or unfair. It makes no difference that your conviction has been quashed. It makes no difference that new evidence – which ought to have been obtained by the police before your trial – shows that you are probably innocent. Those acting on behalf of the state may have bungled the investigation, and possibly even bent the rules to get you convicted. None of that is of any consequence. All that matters is whether you can prove that you suffered a “miscarriage of justice:” ”

I teach statistics, and would happily spend an entire semester explaining how to frame the null, and more importantly, hot to not frame the null. This article does an excellent job of providing an all too important example of the latter.